Dec. 5, 2021

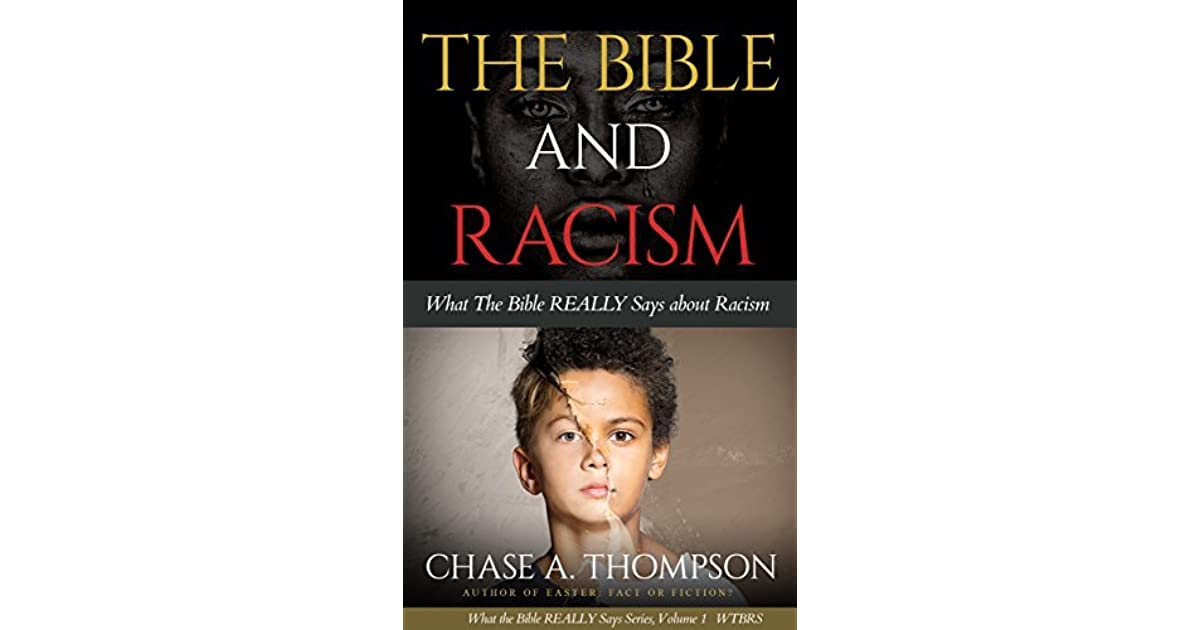

Is Christianity Primarily a White Religion? Is Christianity Mainly an American Religion? NO! Reading Revelation 5 #339. The EXTREME Folly of "Christian" Racism.

Some have used the Bible to justify racism. Others have accused the Bible of somehow supporting racism. What is the truth of the matter: does the Bible teach that Christianity is meant to be a religion for white people only? Is Christianity meant to be an American religion only? NO, NO, NO!